Training

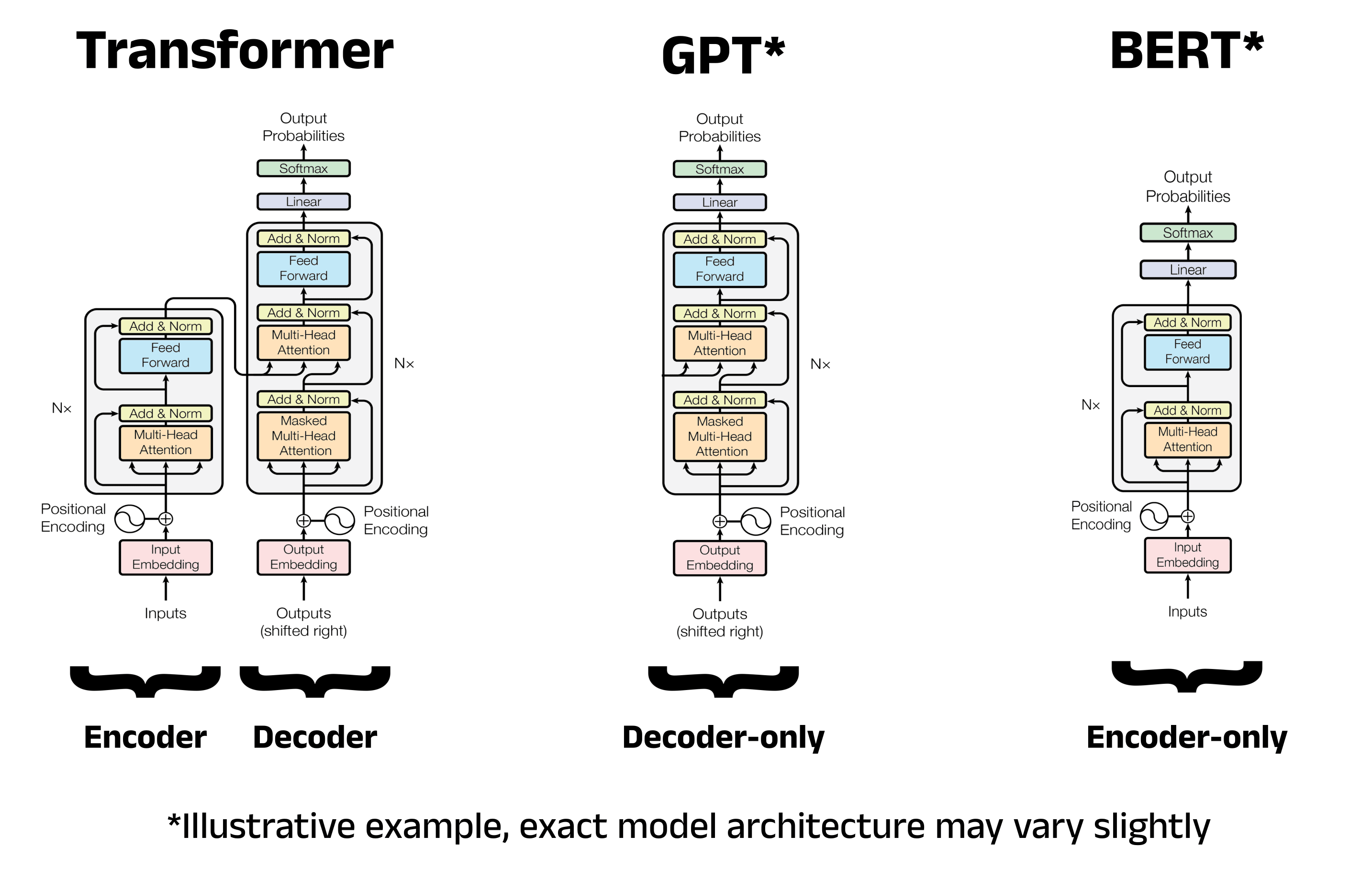

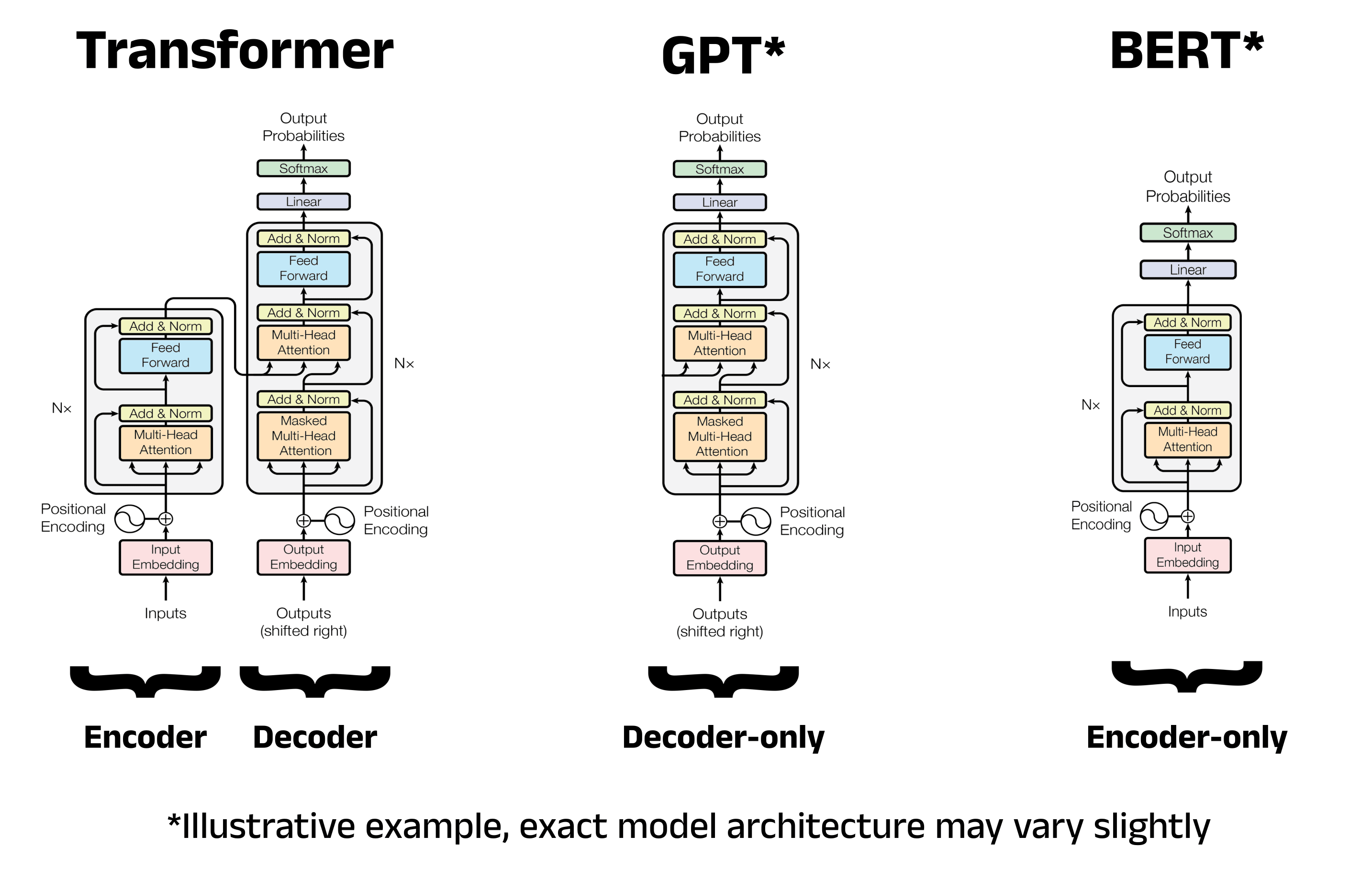

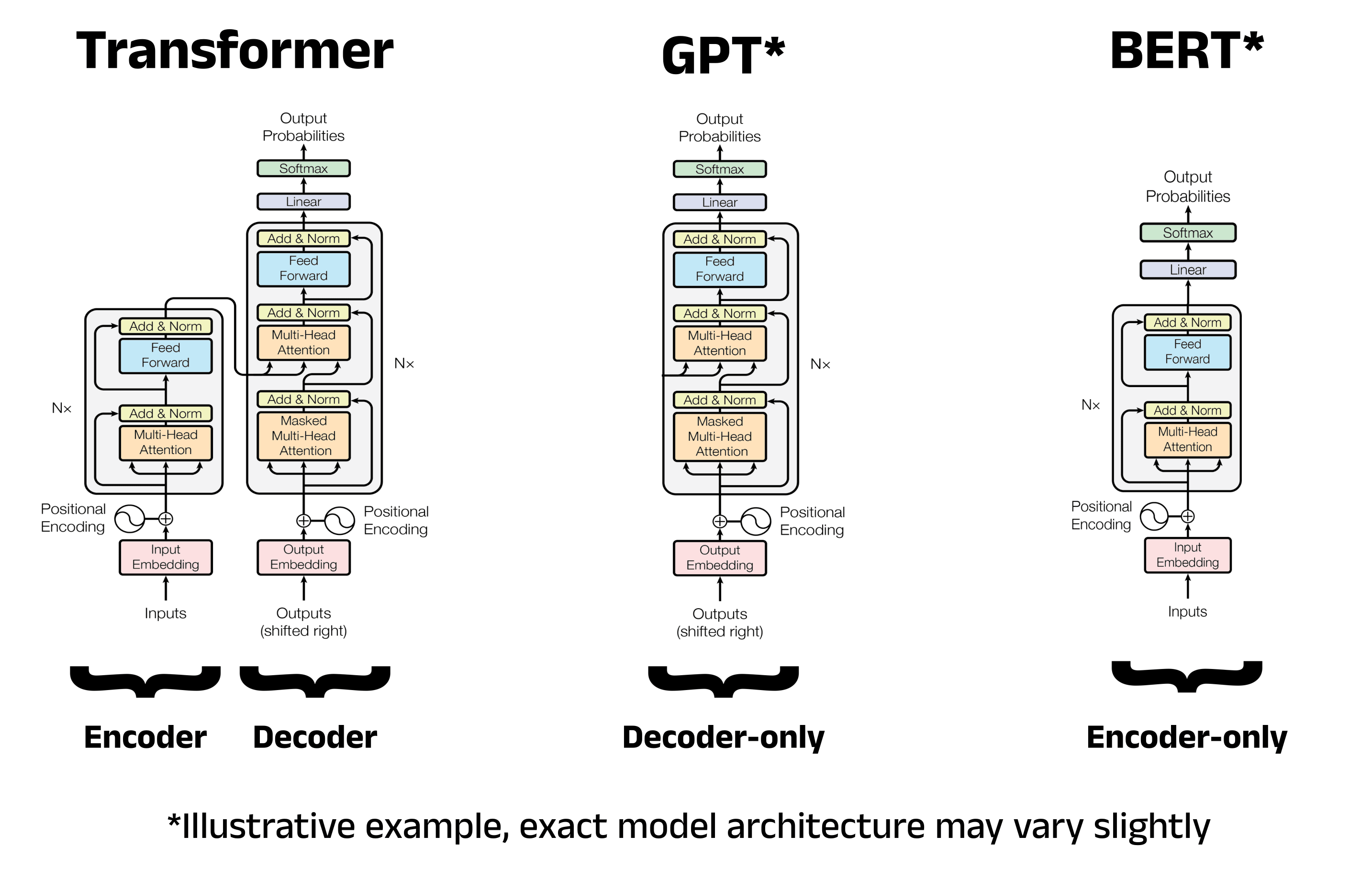

1 The Transformer Architecture

- Model Composition:
  - Encoder:
    - Receives input and builds its representation (features).
    - Optimized for understanding the input.
  - Decoder:
    - Uses the encoder’s representation (features) and other inputs to generate a target sequence.
    - Optimized for generating outputs.


- Usage:
  - Encoder-only models:
    - Suitable for tasks requiring an understanding of the input (e.g., sentence classification, named entity recognition).
  - Decoder-only models:
    - Suitable for generative tasks (e.g., text generation).
  - Encoder-decoder models (sequence-to-sequence models):
    - Suitable for generative tasks that require an input (e.g., translation, summarization).


1.1 Attention layers

Attention layers are integral to the Transformer architecture. The paper introducing the Transformer was titled “Attention Is All You Need,” highlighting the importance of attention layers.

Function of attention layers: These layers direct the model to focus on specific words in a sentence, while downplaying the importance of others.

Contextual meaning: The meaning of a word depends not only on the word itself but also on its context, which includes other words around it.
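In the original paper, an attention layer computes softmax(QKᵀ/√d_k)V: each token (query) scores every token (key), the scores are turned into weights, and the output is a weighted average of the value vectors. The NumPy sketch below implements that formula for one sequence. The toy sizes (4 tokens, 8 dimensions) and the random inputs are assumptions for illustration; real models first apply learned linear projections to obtain Q, K and V.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the row-wise max for numerical stability before exponentiating
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def scaled_dot_product_attention(Q, K, V, mask=None):
    """Q: (n_q, d_k), K: (n_k, d_k), V: (n_k, d_v); mask: bool (n_q, n_k), True = may attend."""
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)               # how well each query matches each key
    if mask is not None:
        scores = np.where(mask, scores, -np.inf)  # blocked pairs end up with weight 0
    weights = softmax(scores, axis=-1)            # each row is a distribution over tokens
    return weights @ V, weights


rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))      # 4 tokens, 8-dimensional vectors (toy sizes)
# Self-attention: queries, keys and values all come from the same tokens
# (real models first apply learned linear projections to x)
output, weights = scaled_dot_product_attention(x, x, x)
print(output.shape)              # (4, 8): one context-mixed vector per token
print(weights.round(2))          # row i: how much token i attends to each token
```

Each row of `weights` is how much one word "focuses" on every other word; the output for a word is therefore a blend of the words it attends to.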

1.1.1 The original architecture

The Transformer architecture was initially designed for translation.

- Encoder:
  - Receives inputs (sentences) in a certain language during training.
  - Attention layers can use all the words in a sentence, considering both preceding and following words.
- Decoder:
  - Receives the same sentences in the desired target language.
  - Works sequentially, paying attention only to the words that have already been translated (i.e., the preceding words).
  - For instance, after predicting the first three words of the translated target, the decoder uses these words and the inputs from the encoder to predict the fourth word.

- Initial Embedding Lookup:
  - The raw embeddings for each token are context-independent.
  - Example: the same embedding is used for “bank” whether it refers to a financial institution or a riverbank.
- Transformer Layers:
  - After the initial embedding lookup, token embeddings (e.g., 768-dimensional vectors) are passed through the transformer’s self-attention layers.
  - These layers enable the model to attend to other tokens in the sequence, capturing relationships and interactions between words.
- Context-Sensitive Representations:
  - As the token embeddings pass through multiple transformer layers, each token’s representation becomes context-sensitive based on surrounding words.
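The difference between the context-independent lookup and the context-sensitive output can be reproduced with a toy example. In the sketch below, a random table stands in for the learned embedding lookup, and a single parameter-free self-attention step stands in for the transformer layers; the tiny vocabulary, the 16-dimensional size and the missing learned projections are all simplifying assumptions. “bank” gets exactly the same vector in both sentences before attention, and different vectors afterwards.

```python
import numpy as np

vocab = {"i": 0, "sat": 1, "by": 2, "the": 3, "river": 4, "bank": 5, "robbed": 6}
rng = np.random.default_rng(42)
embedding_table = rng.normal(size=(len(vocab), 16))  # toy stand-in for the learned lookup


def embed(tokens):
    # Context-independent: each token id always maps to the same row
    return embedding_table[[vocab[t] for t in tokens]]


def self_attention(x):
    # One parameter-free self-attention step: every token mixes in the others
    scores = x @ x.T / np.sqrt(x.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ x


s1 = ["i", "sat", "by", "the", "river", "bank"]
s2 = ["i", "robbed", "the", "bank"]

# "bank" is the last token of both sentences
static_1, static_2 = embed(s1)[-1], embed(s2)[-1]
ctx_1, ctx_2 = self_attention(embed(s1))[-1], self_attention(embed(s2))[-1]

print(np.allclose(static_1, static_2))  # lookup: identical vector in both sentences
print(np.allclose(ctx_1, ctx_2))        # after attention: depends on the surrounding words
```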

1.2 Architectures vs. checkpoints

- Architecture:
  - Refers to the skeleton of the model, defining each layer and operation within it.
- Checkpoints:
  - Weights that are loaded into a given architecture.
- Model:
  - An umbrella term that can refer to both architecture and checkpoints.
- Example:
  - BERT is an architecture.
  - `bert-base-cased`, a set of weights trained by the Google team for the first release of BERT, is a checkpoint.
  - The term “model” can be used to refer to both the architecture (e.g., “BERT model”) and the checkpoint (e.g., “bert-base-cased model”).
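The distinction can be mimicked without any library. In the sketch below, the hypothetical class `TinyClassifier` plays the role of the architecture (it fixes the operations and the parameter shapes), and a plain dictionary of arrays plays the role of a checkpoint (the values of those parameters). This only mirrors, in spirit, how PyTorch modules load weights; the class, its `load_checkpoint` method and the weights are all made up for illustration.

```python
import numpy as np


class TinyClassifier:
    """Hypothetical 'architecture': one linear layer followed by a softmax."""

    def __init__(self, in_dim, n_labels):
        # The architecture fixes the shapes; the values come from a checkpoint
        self.weight = np.zeros((n_labels, in_dim))
        self.bias = np.zeros(n_labels)

    def load_checkpoint(self, checkpoint):
        # A 'checkpoint' is a mapping from parameter names to arrays of matching shapes
        if checkpoint["weight"].shape != self.weight.shape:
            raise ValueError("checkpoint does not match this architecture")
        self.weight = checkpoint["weight"].copy()
        self.bias = checkpoint["bias"].copy()
        return self

    def __call__(self, x):
        logits = self.weight @ x + self.bias
        e = np.exp(logits - logits.max())
        return e / e.sum()


rng = np.random.default_rng(0)
ckpt_a = {"weight": rng.normal(size=(2, 3)), "bias": np.zeros(2)}
ckpt_b = {"weight": rng.normal(size=(2, 3)), "bias": np.zeros(2)}

x = np.array([1.0, -0.5, 2.0])
model_a = TinyClassifier(3, 2).load_checkpoint(ckpt_a)
model_b = TinyClassifier(3, 2).load_checkpoint(ckpt_b)
print(model_a(x), model_b(x))  # same architecture, different checkpoints, different outputs
```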

1.2.1 Decoder models

Decoder models use only the decoder part of a Transformer model.

Attention mechanism: At each stage, the attention layers can only access the words that are positioned before the current word in the sentence.

These models are often referred to as auto-regressive models.

Pretraining: Typically focuses on predicting the next word in a sentence.

Best suited for: Tasks involving text generation.

Examples of decoder models:

- CTRL

- GPT

- GPT-2

- Transformer XL

Step 1: Input Embedding

Converts words into numerical vectors:

```
Text: "The cat eats"
        ↓
Tokens: [The] [cat] [eats]
        ↓
Each becomes a dense vector (e.g., 768 numbers)
[The] → [0.23, -0.45, 0.67, ..., 0.12]
```

Step 2: Positional Encoding

Adds position information, since the attention operation by itself does not take word order into account:

Position 0: Gets encoding vector

Position 1: Gets different encoding vector

Position 2: Gets another different encoding vector
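One concrete encoding is the sinusoidal scheme of the original Transformer paper: PE(pos, 2i) = sin(pos / 10000^(2i/d)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d)). The sketch below shows that scheme only as an illustration: GPT-2 and BERT instead learn their position embeddings as a trainable table (for BERT, the `position_embeddings: Embedding(512, 768)` layer in the model printout of section 5.2). The word embeddings here are random placeholders.

```python
import numpy as np


def sinusoidal_positional_encoding(n_positions, d_model):
    """Sinusoidal encodings from 'Attention Is All You Need' (d_model assumed even)."""
    positions = np.arange(n_positions)[:, None]          # (n_positions, 1)
    two_i = np.arange(0, d_model, 2)[None, :]             # even dimension indices 2i
    angles = positions / np.power(10000.0, two_i / d_model)
    pe = np.zeros((n_positions, d_model))
    pe[:, 0::2] = np.sin(angles)                          # even dimensions: sine
    pe[:, 1::2] = np.cos(angles)                          # odd dimensions: cosine
    return pe


d_model = 768
rng = np.random.default_rng(0)
word_embeddings = rng.normal(size=(3, d_model))   # placeholders for [The] [cat] [eats]
pe = sinusoidal_positional_encoding(3, d_model)
final = word_embeddings + pe                      # Final = Word Embedding + Position Encoding
print(final.shape)                                # (3, 768)
```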

Final = Word Embedding + Position Encoding

Step 3: Transformer Block (repeated N times)

3.1 - Masked Multi-Head Attention

The Causal Mask:

When processing "eats" at position 2:

Can see: [The] [cat] [eats]

Cannot see: [the] [mouse] (future tokens)

Attention mask matrix:

```
        The  cat  eats  the  mouse
The   [  ✓    ✗    ✗     ✗    ✗   ]
cat   [  ✓    ✓    ✗     ✗    ✗   ]
eats  [  ✓    ✓    ✓     ✗    ✗   ]
the   [  ✓    ✓    ✓     ✓    ✗   ]
mouse [  ✓    ✓    ✓     ✓    ✓   ]
```

(✓ = can see, ✗ = blocked)

Why “Multi-Head”? Having multiple attention heads (e.g., 12) allows the model to focus on different aspects simultaneously. For example (illustrative roles: heads are not assigned tasks, and what they learn is often less clear-cut):

- Head 1: Subject-verb relationships
- Head 2: Semantic meaning
- Head 3: Long-range dependencies
- etc.
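The ✓/✗ matrix is a lower-triangular boolean mask. In the NumPy sketch below (toy random vectors, no learned projections, a single head), blocked scores are set to −∞ before the softmax, so every token puts exactly zero weight on later positions.

```python
import numpy as np

tokens = ["The", "cat", "eats", "the", "mouse"]
n = len(tokens)
causal_mask = np.tril(np.ones((n, n), dtype=bool))   # True (✓) on and below the diagonal

rng = np.random.default_rng(0)
x = rng.normal(size=(n, 8))                          # toy token vectors
scores = x @ x.T / np.sqrt(x.shape[-1])
scores = np.where(causal_mask, scores, -np.inf)      # ✗ positions can never be attended to
scores -= scores.max(axis=-1, keepdims=True)         # numerical stability
weights = np.exp(scores)                             # exp(-inf) = 0
weights /= weights.sum(axis=-1, keepdims=True)

for tok, row in zip(tokens, weights):
    print(f"{tok:>6}", np.round(row, 2))
```

The first row is always `[1, 0, 0, 0, 0]`: "The" can only attend to itself.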

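3.2 - Add & Norm

Two important techniques:

- Residual Connection: adds the sub-layer’s input back to its output, which preserves the original information and helps gradients flow through deep networks.
- Layer Normalization: rescales each token vector to zero mean and unit variance (followed by a learned scale and shift), which keeps the values in a stable range during training.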

Think of it as: “Keep the original information and just add the new insights”
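A minimal sketch of one Add & Norm step, assuming the post-norm layout of the original Transformer (GPT-2 moves the normalization to the input of each sub-layer instead) and a random linear map as a stand-in for the attention sub-layer. The learned scale `gamma` and shift `beta` are set to their usual initial values, 1 and 0.

```python
import numpy as np


def layer_norm(x, gamma, beta, eps=1e-5):
    # Normalize each token vector (last axis) independently
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return gamma * (x - mean) / np.sqrt(var + eps) + beta


def add_and_norm(x, sublayer_output, gamma, beta):
    # Residual connection: keep the input and add the sub-layer's "new insights"
    return layer_norm(x + sublayer_output, gamma, beta)


d_model = 768
rng = np.random.default_rng(0)
x = rng.normal(size=(3, d_model))                    # 3 tokens
W = rng.normal(scale=0.02, size=(d_model, d_model))  # stand-in for an attention sub-layer
gamma, beta = np.ones(d_model), np.zeros(d_model)

y = add_and_norm(x, x @ W, gamma, beta)
print(y.mean(axis=-1).round(6), y.std(axis=-1).round(3))  # ~0 mean, ~1 std per token
```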

3.3 - Feed Forward Network

A simple neural network applied to each position independently:

- Expands the representation (768 → 3072 dimensions)
- Applies a non-linear transformation
- Compresses back (3072 → 768 dimensions)

This allows the model to process the attended information and extract higher-level features.
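A sketch of the position-wise feed-forward network with the sizes quoted above (768 → 3072 → 768). The weights are random placeholders, and the non-linearity is GELU in its tanh approximation, chosen here for simplicity. Because the same two matrices are applied to every row, transforming one token on its own gives the same result as transforming it inside the batch.

```python
import numpy as np


def gelu(x):
    # tanh approximation of the GELU activation
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x**3)))


def feed_forward(x, W1, b1, W2, b2):
    hidden = gelu(x @ W1 + b1)   # expand: (n, 768) -> (n, 3072)
    return hidden @ W2 + b2      # compress: (n, 3072) -> (n, 768)


d_model, d_ff = 768, 3072
rng = np.random.default_rng(0)
W1, b1 = rng.normal(scale=0.02, size=(d_model, d_ff)), np.zeros(d_ff)
W2, b2 = rng.normal(scale=0.02, size=(d_ff, d_model)), np.zeros(d_model)

x = rng.normal(size=(3, d_model))
out = feed_forward(x, W1, b1, W2, b2)

# Position-wise: token 1 processed alone matches token 1 processed in the batch
single = feed_forward(x[1:2], W1, b1, W2, b2)
print(out.shape, np.allclose(out[1], single[0]))
```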

Step 4: Stacking Layers

These blocks repeat many times:

- GPT-2: 12 to 48 layers (depending on the model size)
- GPT-3: 96 layers

Each layer refines understanding. As a rough tendency reported by probing studies (not a strict division):

- Early layers: grammar, syntax, word relationships
- Middle layers: meaning, context, semantic relationships
- Late layers: abstract reasoning, global context

Step 5: Output Prediction

The final layer produces probabilities for the next word:

```
Current text: "The cat eats"

Probability for next word:
  [the]    : 0.001
  [a]      : 0.089
  [fish]   : 0.156
  [mice]   : 0.234  ← Most likely
  [quickly]: 0.078
  ...
```
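Under the hood, the final layer outputs one score (logit) per vocabulary entry, and a softmax turns the scores into probabilities like those above. The sketch uses a hypothetical five-word vocabulary and made-up logits. Greedy decoding then picks the most likely word, while sampling draws the next word from the distribution.

```python
import numpy as np

vocab = ["the", "a", "fish", "mice", "quickly"]
logits = np.array([-2.0, 1.5, 2.0, 2.4, 1.2])   # hypothetical scores after "The cat eats"

probs = np.exp(logits - logits.max())
probs /= probs.sum()                               # softmax: non-negative, sums to 1

greedy = vocab[int(np.argmax(probs))]              # greedy decoding
rng = np.random.default_rng(0)
sampled = vocab[rng.choice(len(vocab), p=probs)]   # sampling-based decoding

for word, p in zip(vocab, probs):
    print(f"{word:>8}: {p:.3f}")
print("greedy:", greedy, "| sampled:", sampled)
```

The chosen word is appended to the text and the whole process repeats, which is what makes decoder models auto-regressive.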

1.2.2 Encoder models

Encoder models use only the encoder part of a Transformer model.

Attention mechanism: At each stage, the attention layers can access all the words in the sentence.

These models are characterized by bidirectional attention and are often referred to as auto-encoding models: each token can see both the words before it and the words after it. They are designed for understanding rather than generation.

Pretraining: Typically involves corrupting a sentence (e.g., by masking random words) and tasking the model with reconstructing the original sentence.
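For BERT, this corruption is masked language modeling: about 15% of the tokens are selected as prediction targets; of those, 80% are replaced by `[MASK]`, 10% by a random token, and 10% are left unchanged. The sketch below applies that rule to word-level tokens. The toy vocabulary, the seed and the `mask_tokens` helper are assumptions for illustration; real implementations work on subword ids and never mask special tokens such as `[CLS]`.

```python
import numpy as np


def mask_tokens(tokens, vocab, mask_prob=0.15, seed=0):
    """Return (corrupted tokens, labels); a label is None where no prediction is needed."""
    rng = np.random.default_rng(seed)
    corrupted, labels = list(tokens), [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue                                   # not selected: no loss here
        labels[i] = tok                                # model must recover the original
        r = rng.random()
        if r < 0.8:
            corrupted[i] = "[MASK]"                    # 80%: mask token
        elif r < 0.9:
            corrupted[i] = vocab[int(rng.integers(len(vocab)))]  # 10%: random token
        # remaining 10%: keep the original token
    return corrupted, labels


vocab = ["the", "cat", "eats", "mouse", "dog", "runs", "a", "fish"]
sentence = "the cat eats the mouse and the dog runs after a fish".split()
corrupted, labels = mask_tokens(sentence, vocab, mask_prob=0.3)
print(corrupted)
print(labels)
```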

Best suited for: Tasks requiring a full understanding of the sentence, such as:

- Sentence classification

- Named entity recognition (word classification)

- Extractive question answering

Examples of encoder models:

- ALBERT

- BERT

- DistilBERT

- ELECTRA

- RoBERTa

Step 1: Input Embedding

Similar to GPT, but adds special tokens:

```
Text: "The cat eats the mouse"
        ↓
Tokens: [CLS] [The] [cat] [eats] [the] [mouse] [SEP]

[CLS] = Classification token (for sentence-level tasks)
[SEP] = Separator (marks the end of a sentence; also separates sentence pairs)
```

Step 2: Positional Encoding

Same as GPT: adds position information. BERT also adds a token type (segment) embedding that distinguishes the two sentences of a pair.

Step 3: Transformer Block

3.1 - Multi-Head Attention (NO MASK)

KEY DIFFERENCE: Bidirectional attention

When processing "cat" at position 2:

```
Can see: [CLS] [The] [cat] [eats] [the] [mouse] [SEP]
           ↑     ↑     ↑     ↑     ↑     ↑      ↑
ALL tokens visible (past AND future)
```

Attention matrix (no masking):

```
       CLS  The  cat  eats  the  mouse  SEP
CLS   [ ✓    ✓    ✓    ✓     ✓    ✓      ✓ ]
The   [ ✓    ✓    ✓    ✓     ✓    ✓      ✓ ]
cat   [ ✓    ✓    ✓    ✓     ✓    ✓      ✓ ]  ← Can see everything!
eats  [ ✓    ✓    ✓    ✓     ✓    ✓      ✓ ]
the   [ ✓    ✓    ✓    ✓     ✓    ✓      ✓ ]
mouse [ ✓    ✓    ✓    ✓     ✓    ✓      ✓ ]
SEP   [ ✓    ✓    ✓    ✓     ✓    ✓      ✓ ]
```

Example attention for “eats”:

Can attend to (illustrative weights):

- "cat" (subject before): 0.35
- "mouse" (object after): 0.40 ← Can see future!
- "the" (determiner after): 0.15
- others: 0.10

The model understands full context from both directions, making it better at understanding what words mean in context.

3.2 - Add & Norm

Same as GPT: residual connections and layer normalization.

3.3 - Feed Forward

Same as GPT: expand, transform, compress.

Step 4: Stacking Layers

- BERT-base: 12 layers
- BERT-large: 24 layers

Each layer builds deeper understanding of the full sentence context.

Step 5: Task-Specific Output

No autoregressive generation!

BERT produces a rich representation for each token:

```
[CLS]  → vector  ← Used for sentence classification
[The]  → vector  ← Used for word-level tasks
[cat]  → vector  ← Used for named entity recognition
[eats] → vector
...
```
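For sentence classification, the `[CLS]` vector is what feeds the task head. In the Hugging Face `BertForSequenceClassification` printout of section 5.2, that head is a `BertPooler` (a dense layer plus tanh applied to the `[CLS]` hidden state) followed by a `classifier` linear layer. The NumPy sketch below follows that path with random placeholder weights and a random stand-in for BERT’s final hidden states; dropout is omitted, as at inference time.

```python
import numpy as np

hidden_size, n_labels, seq_len = 768, 2, 7   # 7 tokens: [CLS] The cat eats the mouse [SEP]
rng = np.random.default_rng(0)
hidden_states = rng.normal(size=(seq_len, hidden_size))  # stand-in for BERT's last layer

# Pooler: dense + tanh on the [CLS] vector (position 0)
W_pool = rng.normal(scale=0.02, size=(hidden_size, hidden_size))
b_pool = np.zeros(hidden_size)
pooled = np.tanh(hidden_states[0] @ W_pool + b_pool)

# Classifier: linear layer from 768 dimensions to the number of labels
W_cls = rng.normal(scale=0.02, size=(hidden_size, n_labels))
b_cls = np.zeros(n_labels)
logits = pooled @ W_cls + b_cls

probs = np.exp(logits - logits.max())
probs /= probs.sum()
print(logits.shape, probs, "predicted label:", int(np.argmax(probs)))
```

With random weights the prediction is meaningless; fine-tuning is what trains the pooler and classifier for the task.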

1.2.3 Sequence-to-sequence models

Encoder-decoder models (also known as sequence-to-sequence models) use both parts of the Transformer architecture.

Attention mechanism:

- Encoder: The attention layers can access all the words in the input sentence.

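- Decoder: The self-attention layers can only access the words of the target sequence positioned before the current word; through cross-attention, the decoder can also access the encoder’s representation of the whole input.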
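Each decoder layer therefore combines two kinds of attention: masked self-attention over the target tokens generated so far, and cross-attention in which the queries come from the decoder while the keys and values come from the encoder output. The sketch below shows both with toy random vectors; learned projections, residual connections and layer norms are omitted for brevity.

```python
import numpy as np


def attention(Q, K, V, mask=None):
    scores = Q @ K.T / np.sqrt(Q.shape[-1])
    if mask is not None:
        scores = np.where(mask, scores, -np.inf)
    scores -= scores.max(axis=-1, keepdims=True)
    w = np.exp(scores)
    w /= w.sum(axis=-1, keepdims=True)
    return w @ V, w


rng = np.random.default_rng(0)
d = 16
encoder_out = rng.normal(size=(6, d))   # 6 source tokens, encoded bidirectionally
decoder_in = rng.normal(size=(3, d))    # 3 target tokens generated so far

# 1) Masked self-attention over the target tokens (causal mask)
causal = np.tril(np.ones((3, 3), dtype=bool))
dec_hidden, self_w = attention(decoder_in, decoder_in, decoder_in, mask=causal)

# 2) Cross-attention: queries from the decoder, keys/values from the encoder
cross_out, cross_w = attention(dec_hidden, encoder_out, encoder_out)
print(self_w.shape, cross_w.shape)   # (3, 3) target-to-target, (3, 6) target-to-source
```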

Best suited for: Tasks that involve generating new sentences based on a given input, such as:

- Summarization

- Translation

- Generative question answering

Examples of encoder-decoder models:

- BART

- mBART

- Marian

- T5

2 Fine-tuning a pretrained model

2.1 Processing the data

Here is a first small example:

```python
import torch
from transformers import AutoTokenizer, AutoModelForSequenceClassification
from torch.optim import AdamW

checkpoint = "bert-base-uncased"
tokenizer = AutoTokenizer.from_pretrained(checkpoint)
model = AutoModelForSequenceClassification.from_pretrained(checkpoint)

sequences = [
    "I've been waiting for a HuggingFace course my whole life.",
    "This course is amazing!",
]
batch = tokenizer(sequences, padding=True, truncation=True, return_tensors="pt")
batch["labels"] = torch.tensor([1, 1])  # Set labels: here both sequences are labelled as 1

optimizer = AdamW(model.parameters())
loss = model(**batch).loss  # passing labels makes the model return the loss
loss.backward()             # compute the gradients
optimizer.step()            # update the weights once
```

2.2 Loading a dataset from the Hub

The Hub contains not only models but also many datasets in lots of different languages (https://huggingface.co/datasets).

MRPC dataset: the Microsoft Research Paraphrase Corpus consists of sentence pairs, each labelled according to whether the two sentences are paraphrases. It is one of the 10 datasets composing the GLUE benchmark, an academic benchmark used to measure the performance of ML models across a range of text classification tasks.

```python
from datasets import load_dataset

raw_datasets = load_dataset("glue", "mrpc")
raw_datasets
## DatasetDict({
##     train: Dataset({
##         features: ['sentence1', 'sentence2', 'label', 'idx'],
##         num_rows: 3668
##     })
##     validation: Dataset({
##         features: ['sentence1', 'sentence2', 'label', 'idx'],
##         num_rows: 408
##     })
##     test: Dataset({
##         features: ['sentence1', 'sentence2', 'label', 'idx'],
##         num_rows: 1725
##     })
## })
```

```python
raw_train_dataset = raw_datasets["train"]
raw_train_dataset[0]
## {'sentence1': 'Amrozi accused his brother , whom he called " the witness " , of deliberately distorting his evidence .', 'sentence2': 'Referring to him as only " the witness " , Amrozi accused his brother of deliberately distorting his evidence .', 'label': 1, 'idx': 0}
```

```python
raw_train_dataset.features
## {'sentence1': Value('string'), 'sentence2': Value('string'), 'label': ClassLabel(names=['not_equivalent', 'equivalent']), 'idx': Value('int32')}
```

```python
raw_datasets["train"]["sentence1"][:3]
## ['Amrozi accused his brother , whom he called " the witness " , of deliberately distorting his evidence .', "Yucaipa owned Dominick 's before selling the chain to Safeway in 1998 for $ 2.5 billion .", 'They had published an advertisement on the Internet on June 10 , offering the cargo for sale , he added .']
```

3 Preprocessing a dataset

To preprocess the dataset, we need to convert the text to numbers the model can make sense of. This is done with a tokenizer. We can feed the tokenizer one sentence or a list of sentences, so we can directly tokenize all the first sentences and all the second sentences of each pair like this:

```python
from transformers import AutoTokenizer

checkpoint = "bert-base-uncased"
tokenizer = AutoTokenizer.from_pretrained(checkpoint)
tokenized_sentences_1 = tokenizer(list(raw_datasets["train"]["sentence1"]))
tokenized_sentences_2 = tokenizer(list(raw_datasets["train"]["sentence2"]))
```

- Tokenizing the two sequences separately and passing them to the model will not yield a prediction of whether the sentences are paraphrases.

- The two sequences must be handled as a pair and preprocessed appropriately.

- The tokenizer can accept a pair of sequences and process them in the format required by the BERT model.

```python
inputs = tokenizer("This is the first sentence.", "This is the second one.")
inputs
## {'input_ids': [101, 2023, 2003, 1996, 2034, 6251, 1012, 102, 2023, 2003, 1996, 2117, 2028, 1012, 102], 'token_type_ids': [0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1], 'attention_mask': [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1]}
tokenizer.convert_ids_to_tokens(inputs["input_ids"])
## ['[CLS]', 'this', 'is', 'the', 'first', 'sentence', '.', '[SEP]', 'this', 'is', 'the', 'second', 'one', '.', '[SEP]']
```

- Token Type IDs:

  - Parts of the input corresponding to `[CLS] sentence1 [SEP]` have a token type ID of 0.
  - Parts of the input corresponding to `sentence2 [SEP]` have a token type ID of 1.

- Handling Token Type IDs:
  - Generally, there’s no need to worry about `token_type_ids` in tokenized inputs.
  - As long as the tokenizer and model use the same checkpoint, the tokenizer provides exactly the inputs the model expects (some checkpoints, such as DistilBERT, do not use token type IDs, and their tokenizers do not return them).
- Tokenizing a Dataset:
  - The tokenizer can handle a list of sentence pairs by taking separate lists for the first and second sentences.
  - This approach is compatible with the padding and truncation options.

```python
tokenized_dataset = tokenizer(
    list(raw_datasets["train"]["sentence1"]),
    list(raw_datasets["train"]["sentence2"]),
    padding=True,
    truncation=True,
)
```

- The initial method works well but has some limitations:

  - It returns a dictionary with specific keys (`input_ids`, `attention_mask`, `token_type_ids`) whose values are lists of lists.
  - It requires enough RAM to store the entire dataset during tokenization.
  - In contrast, 🤗 Datasets library datasets are Apache Arrow files stored on disk, so only the requested samples are loaded in memory.
- To address these limitations and keep the data in the dataset format:
  - Use the `Dataset.map()` method, which also allows for extra preprocessing beyond tokenization.
  - `map()` applies a function to each element in the dataset, so a custom tokenization function can be used.

```python
def tokenize_function(example):
    return tokenizer(example["sentence1"], example["sentence2"], truncation=True)


tokenize_function(raw_datasets["train"][0])
## {'input_ids': [101, 2572, 3217, 5831, 5496, 2010, 2567, 1010, 3183, 2002, 2170, 1000, 1996, 7409, 1000, 1010, 1997, 9969, 4487, 23809, 3436, 2010, 3350, 1012, 102, 7727, 2000, 2032, 2004, 2069, 1000, 1996, 7409, 1000, 1010, 2572, 3217, 5831, 5496, 2010, 2567, 1997, 9969, 4487, 23809, 3436, 2010, 3350, 1012, 102], 'token_type_ids': [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1], 'attention_mask': [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1]}
```

- The function takes a dictionary as input (like a dataset item) and returns a new dictionary with the keys `input_ids`, `attention_mask`, and `token_type_ids`.

- The function can handle multiple samples simultaneously:
  - Each key can contain a list of sentences.
  - This allows using `batched=True` in the `map()` call, which speeds up tokenization by processing multiple samples at once.

- Padding optimization:
  - Padding is left out of the tokenization function, because padding every sample to the longest sample of the whole dataset is inefficient.
  - Instead, padding is applied when building a batch, so samples are only padded to the maximum length within each batch rather than across the entire dataset.
  - This saves time and processing power, especially when input lengths vary a lot.

- Application on datasets:
  - The tokenization function is applied to all splits at once using `map()` with `batched=True`.
  - The 🤗 Datasets library adds new fields to each split based on the keys of the returned dictionary.
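To make the effect of `batched=True` concrete, here is a simplified pure-Python emulation of what `map()` does with a batched function. It is not the 🤗 Datasets implementation (which works on Arrow tables and caches results on disk); `emulate_batched_map` and the whitespace `toy_tokenize` function are hypothetical names made up for illustration. The point is the data shape: the function receives a dictionary of lists (one slice per column) and returns new columns, which are merged into the dataset.

```python
def emulate_batched_map(columns, function, batch_size=1000):
    """Simplified stand-in for Dataset.map(function, batched=True).

    `columns` is a dict of equal-length lists (one list per feature).
    """
    n_rows = len(next(iter(columns.values())))
    new_columns = {}
    for start in range(0, n_rows, batch_size):
        # The function sees a slice of every column at once
        batch = {k: v[start:start + batch_size] for k, v in columns.items()}
        for key, values in function(batch).items():
            new_columns.setdefault(key, []).extend(values)
    # Returned keys are added to the dataset; an existing key would be overwritten
    return {**columns, **new_columns}


def toy_tokenize(batch):
    # Hypothetical whitespace "tokenizer" for sentence pairs
    return {"words": [a.split() + ["[SEP]"] + b.split()
                      for a, b in zip(batch["sentence1"], batch["sentence2"])]}


data = {
    "sentence1": ["This is the first sentence.", "Another pair"],
    "sentence2": ["This is the second one.", "of sentences"],
    "label": [1, 0],
}
result = emulate_batched_map(data, toy_tokenize, batch_size=1)
print(sorted(result))        # ['label', 'sentence1', 'sentence2', 'words']
print(result["words"][1])    # ['Another', 'pair', '[SEP]', 'of', 'sentences']
```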

```python
tokenized_datasets = raw_datasets.map(tokenize_function, batched=True)
tokenized_datasets
## DatasetDict({
##     train: Dataset({
##         features: ['sentence1', 'sentence2', 'label', 'idx', 'input_ids', 'token_type_ids', 'attention_mask'],
##         num_rows: 3668
##     })
##     validation: Dataset({
##         features: ['sentence1', 'sentence2', 'label', 'idx', 'input_ids', 'token_type_ids', 'attention_mask'],
##         num_rows: 408
##     })
##     test: Dataset({
##         features: ['sentence1', 'sentence2', 'label', 'idx', 'input_ids', 'token_type_ids', 'attention_mask'],
##         num_rows: 1725
##     })
## })
```

- Tokenize Function Output:

  - The function returns a dictionary with the keys `input_ids`, `attention_mask`, and `token_type_ids`.
  - These fields are added to all splits of the dataset.
- Customization with `map()`:
  - Existing fields can also be modified, by returning new values for an existing key in the preprocessing function.

4 With your own Dataset

```python
import pandas as pd
from sklearn.model_selection import train_test_split
from datasets import Dataset, DatasetDict

# Load the texts and the novelty scores, then join them on 'id'
df = pd.read_csv('Data/text1995.csv')
data_wang = pd.read_json('Data/1995.json')
data_wang['score'] = data_wang['items_wang_3_restricted50'].apply(lambda x: x['score']['novelty'])
df = pd.merge(df[['id', 'text']], data_wang[['id', 'score']], on='id', how='inner')

# Binarize: every document with a positive novelty score gets label 1
# (.copy() avoids pandas' SettingWithCopyWarning when assigning to a filtered frame)
positive_score_subset = df[df['score'] > 0].copy()
positive_score_subset['score'] = 1
num_positive = positive_score_subset.shape[0]

# Undersample the zero-score documents to balance the classes
# (sample() raises an error if there are fewer than num_positive such rows)
zero_score_subset = df[df['score'] == 0].sample(n=num_positive, random_state=42)

# Combine and shuffle
balanced_df = pd.concat([positive_score_subset, zero_score_subset])
balanced_df = balanced_df.sample(frac=1, random_state=24).reset_index(drop=True)
balanced_df['score'] = balanced_df['score'].astype(int)
balanced_df = balanced_df[['id', 'score', 'text']].set_index('id')

# 60% train; the remaining 40% is split in half: 20% validation, 20% test
train_df, temp_df = train_test_split(balanced_df, test_size=0.4, random_state=42)
valid_df, test_df = train_test_split(temp_df, test_size=0.5, random_state=42)

# Convert each split to a 🤗 Dataset and save the DatasetDict to disk
train_dataset = Dataset.from_pandas(train_df)
valid_dataset = Dataset.from_pandas(valid_df)
test_dataset = Dataset.from_pandas(test_df)

datasets = DatasetDict({
    'train': train_dataset,
    'validation': valid_dataset,
    'test': test_dataset,
})
datasets.save_to_disk('Data/test_novelty')
```

4.1 Dynamic padding

- Collate Function:
  - The collate function assembles the samples of a batch inside a `DataLoader`.
  - Default behavior: converts samples to PyTorch tensors and stacks them, handling lists, tuples, or dictionaries recursively.
  - Limitation: this fails when the inputs of a batch have different lengths.
- Batch Padding Strategy:
  - Padding is deliberately applied only as needed for each batch, to avoid excessive padding.
  - Benefit: speeds up training, since the model does not process long runs of useless padding tokens.
- Custom Collate Function with Padding:
  - A custom collate function applies the appropriate padding to the batch items.
  - The Transformers library provides `DataCollatorWithPadding` for this purpose.
  - It takes a tokenizer, which knows the padding token and whether the model expects padding on the left or on the right of the inputs.

```python
from transformers import DataCollatorWithPadding

data_collator = DataCollatorWithPadding(tokenizer=tokenizer)

samples = tokenized_datasets["train"][:8]
samples = {k: v for k, v in samples.items() if k not in ["idx", "sentence1", "sentence2"]}
[len(x) for x in samples["input_ids"]]
## [50, 59, 47, 67, 59, 50, 62, 32]
```

- Samples have varying lengths, ranging from 32 to 67.

- Dynamic padding: Pads samples in a batch to the maximum length within that batch (67 in this case).

- Without dynamic padding: Would require padding all samples to the maximum length across the entire dataset or to the model’s maximum acceptable length.

- A check on `data_collator` confirms that dynamic padding is applied to the batch.
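The savings from dynamic padding can be counted directly from the eight lengths printed above. The sketch pads plain Python lists the way a padding collator does (pad id 0 and attention mask 0 on padding positions, as for `bert-base-uncased`), then compares the number of padding tokens when padding to the batch maximum versus padding to 512, BERT’s maximum length. The `pad_batch` helper and the placeholder token ids are hypothetical; `DataCollatorWithPadding` itself delegates the padding to the tokenizer.

```python
def pad_batch(input_ids_list, pad_id=0, pad_to=None):
    """Right-pad token id lists to the batch maximum (or to `pad_to`)."""
    target = pad_to if pad_to is not None else max(len(ids) for ids in input_ids_list)
    input_ids, attention_mask = [], []
    for ids in input_ids_list:
        n_pad = target - len(ids)
        input_ids.append(ids + [pad_id] * n_pad)
        attention_mask.append([1] * len(ids) + [0] * n_pad)  # 0 marks padding
    return {"input_ids": input_ids, "attention_mask": attention_mask}


def count_padding(batch):
    return sum(row.count(0) for row in batch["attention_mask"])


lengths = [50, 59, 47, 67, 59, 50, 62, 32]   # lengths printed above
fake_ids = [[1] * n for n in lengths]         # placeholder token ids

dynamic = pad_batch(fake_ids)                 # pad to 67, the batch maximum
fixed = pad_batch(fake_ids, pad_to=512)       # pad to BERT's maximum length
print(len(dynamic["input_ids"][0]), count_padding(dynamic))  # 67 110
print(len(fixed["input_ids"][0]), count_padding(fixed))      # 512 3670
```

For this batch, 426 real tokens come with 110 padding tokens under dynamic padding, against 3,670 when everything is padded to 512.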

```python
batch = data_collator(samples)
{k: v.shape for k, v in batch.items()}
## {'input_ids': torch.Size([8, 67]), 'token_type_ids': torch.Size([8, 67]), 'attention_mask': torch.Size([8, 67]), 'labels': torch.Size([8])}
```

5 Fine-tuning a model with the Trainer API

- Trainer Class in Transformers:
  - Transformers provides the `Trainer` class for fine-tuning pretrained models on custom datasets.
  - After data preprocessing, only a few steps are needed to define the `Trainer`.
- Setting Up the Training Environment:
  - Running `Trainer.train()` on a CPU is very slow; a GPU is recommended.
  - Google Colab offers access to free GPUs and TPUs for faster training.

```python
from datasets import load_dataset
from transformers import AutoTokenizer, DataCollatorWithPadding

raw_datasets = load_dataset("glue", "mrpc")
checkpoint = "bert-base-uncased"
tokenizer = AutoTokenizer.from_pretrained(checkpoint)


def tokenize_function(example):
    return tokenizer(example["sentence1"], example["sentence2"], truncation=True)


tokenized_datasets = raw_datasets.map(tokenize_function, batched=True)
data_collator = DataCollatorWithPadding(tokenizer=tokenizer)
```

5.1 Training

- Define TrainingArguments:
  - Before defining the `Trainer`, create a `TrainingArguments` object.
  - It holds all the hyperparameters used for training and evaluation.
- Required Argument:
  - A directory where:
    - The trained model will be saved.
    - Checkpoints will be stored during training.
- Default Settings:
  - The defaults for the other parameters are generally sufficient for basic fine-tuning.

```python
from transformers import TrainingArguments

training_args = TrainingArguments("test-trainer0")
```

```python
from transformers import AutoModelForSequenceClassification

model = AutoModelForSequenceClassification.from_pretrained(checkpoint, num_labels=2)
```

- Warning on Model Instantiation:

- A warning appears after loading the pretrained BERT model.

- BERT was not pretrained for sentence pair classification, so the original model head is discarded.

- A new head for sequence classification is added, causing:

- Some weights to be unused (from the discarded pretraining head).

- Some weights to be randomly initialized (for the new classification head).

- The warning suggests training the model to optimize the new head.

- Defining a Trainer:
  - The `Trainer` requires the following components:
    - The modified model (with a new head).
    - `training_args`: settings and configurations for the training process.
    - The training and validation datasets.
    - `data_collator`: a function to collate batches of data.
    - `tokenizer`: to process text inputs.

```python
from transformers import Trainer

trainer = Trainer(
    model,
    training_args,
    train_dataset=tokenized_datasets["train"],
    eval_dataset=tokenized_datasets["validation"],
    data_collator=data_collator,
    tokenizer=tokenizer,
)
## <string>:2: FutureWarning: `tokenizer` is deprecated and will be removed in version 5.0.0 for `Trainer.__init__`. Use `processing_class` instead.
```

As the warning says, recent versions of Transformers expect `processing_class=tokenizer` instead of `tokenizer=tokenizer`.

- To fine-tune the model on our dataset, we just have to call the `train()` method of our `Trainer`.

- The fine-tuning process will begin, which should take a few minutes on a GPU.

- Training loss will be reported every 500 steps.

- However, model performance (quality) is not assessed because:
  - No evaluation strategy was set: `eval_strategy` (called `evaluation_strategy` in older versions) was not set to `"steps"` (evaluate every `eval_steps`) or `"epoch"` (evaluate at the end of each epoch).
  - No `compute_metrics()` function was provided: without it, no metrics are calculated during evaluation, and only the loss would be printed, which is not very informative.

5.2 Evaluation

Goal: build a `compute_metrics()` function to use during model training.

Function requirements:

- It accepts an `EvalPrediction` object (a named tuple with a `predictions` field and a `label_ids` field).
- It returns a dictionary:
  - Keys are metric names (strings).
  - Values are metric values (floats).

Usage:

- Use `Trainer.predict()` to generate model predictions.

```python
predictions = trainer.predict(tokenized_datasets["validation"])
print(predictions.predictions.shape, predictions.label_ids.shape)
## (408, 2) (408,)
```

- `predict()` method output:

  - Returns a named tuple with three fields:
    - `predictions`:
      - A 2D array of shape 408 × 2 (one row per element of the validation set).
      - Contains the logits for each element, which must be transformed into predictions.
      - Transformation: take the index of the maximum value along the second axis.
    - `label_ids`: the labels, for comparison.
    - `metrics`:
      - Initially includes:
        - The loss on the dataset passed.
        - Time metrics (total and average prediction time).
      - When a `compute_metrics()` function is defined and passed to the `Trainer`, `metrics` also includes the metrics it returns.

```python
import numpy as np

preds = np.argmax(predictions.predictions, axis=-1)
```

- We can now compare these `preds` to the labels.
- To build our `compute_metrics()` function, we rely on the metrics from the Evaluate library.
- We can load the metrics associated with the MRPC dataset as easily as we loaded the dataset, this time with the `evaluate.load()` function.
- The returned object has a `compute()` method that performs the metric calculation:

```python
import evaluate

metric = evaluate.load("glue", "mrpc")
metric.compute(predictions=preds, references=predictions.label_ids)
## {'accuracy': 0.6838235294117647, 'f1': 0.8122270742358079}
```
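These scores deserve a sanity check. The reported accuracy implies that 279 of the 408 validation labels are 1 (279 / 408 ≈ 0.684), and a classifier that always answers 1 would then get exactly this accuracy and this F1 (precision 279/408, recall 1). The plain-Python computation below confirms it, under that assumed label count; it is consistent with the diagnostics that follow, where every prediction equals 1.

```python
def accuracy_and_f1(preds, labels, positive=1):
    tp = sum(p == positive and y == positive for p, y in zip(preds, labels))
    fp = sum(p == positive and y != positive for p, y in zip(preds, labels))
    fn = sum(p != positive and y == positive for p, y in zip(preds, labels))
    accuracy = sum(p == y for p, y in zip(preds, labels)) / len(labels)
    f1 = 2 * tp / (2 * tp + fp + fn)   # equivalent to 2PR / (P + R)
    return {"accuracy": accuracy, "f1": f1}


labels = [1] * 279 + [0] * 129          # assumed counts, implied by the reported accuracy
always_positive = [1] * len(labels)     # a "model" that predicts 1 for every pair
print(accuracy_and_f1(always_positive, labels))
```

In other words, at this point the model only reproduces the majority class and does not yet distinguish paraphrases from non-paraphrases.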

```python
print("Preds:", preds[:10])
## Preds: [1 1 1 1 1 1 1 1 1 1]
print("Labels:", predictions.label_ids[:10])
## Labels: [1 0 0 1 0 1 0 1 1 1]
print("Preds type:", type(preds[0]))
## Preds type: <class 'numpy.int64'>
print("Labels type:", type(predictions.label_ids[0]))
## Labels type: <class 'numpy.int64'>
print("Unique values in preds:", np.unique(preds))
## Unique values in preds: [1]
print("Unique values in labels:", np.unique(predictions.label_ids))
## Unique values in labels: [0 1]
```

```python
def compute_metrics(eval_preds):
    metric = evaluate.load("glue", "mrpc")
    logits, labels = eval_preds                # EvalPrediction unpacks as (predictions, label_ids)
    predictions = np.argmax(logits, axis=-1)   # logits -> predicted class ids
    return metric.compute(predictions=predictions, references=labels)
```

```python
device = torch.device("mps") if torch.backends.mps.is_available() else torch.device("cpu")
training_args = TrainingArguments("test-trainer", eval_strategy="epoch")
model = AutoModelForSequenceClassification.from_pretrained(checkpoint, num_labels=2)
model.to(device)  # optional: the Trainer also places the model on the available device
## BertForSequenceClassification(
##   (bert): BertModel(
##     (embeddings): BertEmbeddings(
##       (word_embeddings): Embedding(30522, 768, padding_idx=0)
##       (position_embeddings): Embedding(512, 768)
##       (token_type_embeddings): Embedding(2, 768)
##       (LayerNorm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
##       (dropout): Dropout(p=0.1, inplace=False)
##     )
##     (encoder): BertEncoder(
##       (layer): ModuleList(
##         (0-11): 12 x BertLayer(
##           (attention): BertAttention(
##             (self): BertSdpaSelfAttention(
##               (query): Linear(in_features=768, out_features=768, bias=True)
##               (key): Linear(in_features=768, out_features=768, bias=True)
##               (value): Linear(in_features=768, out_features=768, bias=True)
##               (dropout): Dropout(p=0.1, inplace=False)
##             )
##             (output): BertSelfOutput(
##               (dense): Linear(in_features=768, out_features=768, bias=True)
##               (LayerNorm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
##               (dropout): Dropout(p=0.1, inplace=False)
##             )
##           )
##           (intermediate): BertIntermediate(
##             (dense): Linear(in_features=768, out_features=3072, bias=True)
##             (intermediate_act_fn): GELUActivation()
##           )
##           (output): BertOutput(
##             (dense): Linear(in_features=3072, out_features=768, bias=True)
##             (LayerNorm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
##             (dropout): Dropout(p=0.1, inplace=False)
##           )
##         )
##       )
##     )
##     (pooler): BertPooler(
##       (dense): Linear(in_features=768, out_features=768, bias=True)
##       (activation): Tanh()
##     )
##   )
##   (dropout): Dropout(p=0.1, inplace=False)
##   (classifier): Linear(in_features=768, out_features=2, bias=True)
## )
```

```python
trainer = Trainer(
    model,
    training_args,
    train_dataset=tokenized_datasets["train"],
    eval_dataset=tokenized_datasets["validation"],
    data_collator=data_collator,
    tokenizer=tokenizer,
    compute_metrics=compute_metrics,
)
## <string>:2: FutureWarning: `tokenizer` is deprecated and will be removed in version 5.0.0 for `Trainer.__init__`. Use `processing_class` instead.
```

- New TrainingArguments:

  - A new `TrainingArguments` object is created, with the `eval_strategy` parameter set to `"epoch"`.
- New Model:
  - A new model is instantiated for training.
  - This prevents continuing training on a model that has already been trained.
- To launch a new training run, we execute:

```python
trainer.train()
```

## 0%| | 0/1377 [00:00<?, ?it/s]/Users/peltouz/Documents/GitHub/M2-Py-DS2E/hf/lib/python3.13/site-packages/torch/utils/data/dataloader.py:692: UserWarning: 'pin_memory' argument is set as true but not supported on MPS now, device pinned memory won't be used.

## warnings.warn(warn_msg)

## {'eval_loss': 0.41917771100997925, 'eval_accuracy': 0.8382352941176471, 'eval_f1': 0.8885135135135135, 'eval_runtime': 2.2577, 'eval_samples_per_second': 180.719, 'eval_steps_per_second': 22.59, 'epoch': 1.0}

## {'loss': 0.5506, 'grad_norm': 18.350160598754883, 'learning_rate': 3.1880900508351494e-05, 'epoch': 1.09}
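
The `learning_rate` in the log above can be checked by hand. The training arguments are not shown in this section, so the sketch below assumes the `Trainer` defaults (`learning_rate=5e-5`, `lr_scheduler_type="linear"`, no warmup) and takes the total of 1377 optimizer steps (3 epochs of 459 steps) from the progress bar. Under those assumptions the rate decays linearly to zero, and `linear_lr` is a hypothetical helper written for this illustration, not a library function.

```python
# Sketch: reproduce the logged learning rate under a linear-decay schedule.
# Assumptions (not confirmed by the original code): base lr 5e-5 (Trainer
# default), linear decay to 0, no warmup, 1377 total steps (3 x 459).

def linear_lr(base_lr: float, updates_done: int, total_steps: int) -> float:
    """Learning rate after `updates_done` scheduler updates (no warmup)."""
    # lr = base_lr * (total_steps - t) / total_steps, clipped at 0
    return base_lr * max(0.0, (total_steps - updates_done) / total_steps)

BASE_LR = 5e-5
TOTAL_STEPS = 1377
STEPS_PER_EPOCH = 459

step = 500
epoch = step / STEPS_PER_EPOCH  # fractional epoch reported in the log

# The rate applied during the 500th optimizer step has seen 499 updates.
lr_used_at_step_500 = linear_lr(BASE_LR, step - 1, TOTAL_STEPS)
print(round(epoch, 2), lr_used_at_step_500)
```

In this run the logged value matches `5e-5 * 878 / 1377`, i.e. the rate after 499 scheduler updates, which is the rate used for the 500th step; evaluating the formula after 500 updates would give roughly `3.184e-05` instead.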

## 36%|###6 | 500/1377 [00:56<01:33, 9.36it/s] 36%|###6 | 501/1377 [00:57<05:00, 2.91it/s] 36%|###6 | 502/1377 [00:57<04:02, 3.60it/s] 37%|###6 | 503/1377 [00:57<03:18, 4.41it/s] 37%|###6 | 505/1377 [00:57<02:30, 5.81it/s] 37%|###6 | 506/1377 [00:57<02:16, 6.39it/s] 37%|###6 | 507/1377 [00:57<02:04, 7.01it/s] 37%|###6 | 509/1377 [00:58<01:48, 8.04it/s] 37%|###7 | 511/1377 [00:58<01:41, 8.55it/s] 37%|###7 | 512/1377 [00:58<01:40, 8.63it/s] 37%|###7 | 514/1377 [00:58<01:34, 9.09it/s] 37%|###7 | 515/1377 [00:58<01:34, 9.12it/s] 37%|###7 | 516/1377 [00:58<01:34, 9.08it/s] 38%|###7 | 517/1377 [00:59<01:34, 9.13it/s] 38%|###7 | 518/1377 [00:59<01:33, 9.22it/s] 38%|###7 | 519/1377 [00:59<01:33, 9.18it/s] 38%|###7 | 520/1377 [00:59<01:32, 9.24it/s] 38%|###7 | 521/1377 [00:59<01:32, 9.30it/s] 38%|###7 | 522/1377 [00:59<01:32, 9.29it/s] 38%|###7 | 523/1377 [00:59<01:31, 9.33it/s] 38%|###8 | 524/1377 [00:59<01:30, 9.42it/s] 38%|###8 | 525/1377 [00:59<01:37, 8.76it/s] 38%|###8 | 527/1377 [01:00<01:32, 9.17it/s] 38%|###8 | 528/1377 [01:00<01:31, 9.28it/s] 38%|###8 | 529/1377 [01:00<01:30, 9.35it/s] 38%|###8 | 530/1377 [01:00<01:31, 9.29it/s] 39%|###8 | 531/1377 [01:00<01:33, 9.00it/s] 39%|###8 | 532/1377 [01:00<01:31, 9.23it/s] 39%|###8 | 534/1377 [01:00<01:25, 9.84it/s] 39%|###8 | 535/1377 [01:00<01:27, 9.63it/s] 39%|###8 | 536/1377 [01:01<01:28, 9.47it/s] 39%|###9 | 538/1377 [01:01<01:23, 10.02it/s] 39%|###9 | 539/1377 [01:01<01:27, 9.62it/s] 39%|###9 | 540/1377 [01:01<01:27, 9.59it/s] 39%|###9 | 542/1377 [01:01<01:24, 9.92it/s] 39%|###9 | 543/1377 [01:01<01:25, 9.79it/s] 40%|###9 | 544/1377 [01:01<01:26, 9.63it/s] 40%|###9 | 545/1377 [01:01<01:26, 9.66it/s] 40%|###9 | 546/1377 [01:02<01:25, 9.68it/s] 40%|###9 | 547/1377 [01:02<01:26, 9.64it/s] 40%|###9 | 548/1377 [01:02<01:27, 9.42it/s] 40%|###9 | 549/1377 [01:02<01:28, 9.36it/s] 40%|###9 | 550/1377 [01:02<01:28, 9.35it/s] 40%|#### | 551/1377 [01:02<01:28, 9.39it/s] 40%|#### | 552/1377 [01:02<01:27, 9.46it/s] 40%|#### | 
553/1377 [01:02<01:26, 9.57it/s] 40%|#### | 554/1377 [01:02<01:28, 9.26it/s] 40%|#### | 555/1377 [01:03<01:33, 8.75it/s] 40%|#### | 556/1377 [01:03<01:33, 8.78it/s] 40%|#### | 557/1377 [01:03<01:38, 8.30it/s] 41%|#### | 558/1377 [01:03<01:42, 8.02it/s] 41%|#### | 559/1377 [01:03<01:40, 8.14it/s] 41%|#### | 560/1377 [01:03<01:36, 8.45it/s] 41%|#### | 561/1377 [01:03<01:32, 8.78it/s] 41%|#### | 562/1377 [01:03<01:32, 8.84it/s] 41%|#### | 563/1377 [01:04<01:31, 8.94it/s] 41%|#### | 564/1377 [01:04<01:29, 9.07it/s] 41%|####1 | 565/1377 [01:04<01:28, 9.12it/s] 41%|####1 | 566/1377 [01:04<01:27, 9.24it/s] 41%|####1 | 567/1377 [01:04<01:27, 9.30it/s] 41%|####1 | 568/1377 [01:04<01:26, 9.35it/s] 41%|####1 | 569/1377 [01:04<01:26, 9.35it/s] 41%|####1 | 570/1377 [01:04<01:25, 9.39it/s] 41%|####1 | 571/1377 [01:04<01:25, 9.47it/s] 42%|####1 | 572/1377 [01:04<01:24, 9.50it/s] 42%|####1 | 573/1377 [01:05<01:24, 9.56it/s] 42%|####1 | 574/1377 [01:05<01:27, 9.16it/s] 42%|####1 | 575/1377 [01:05<01:26, 9.26it/s] 42%|####1 | 576/1377 [01:05<01:26, 9.30it/s] 42%|####1 | 577/1377 [01:05<01:29, 8.97it/s] 42%|####1 | 578/1377 [01:05<01:27, 9.15it/s] 42%|####2 | 579/1377 [01:05<01:26, 9.28it/s] 42%|####2 | 580/1377 [01:05<01:26, 9.16it/s] 42%|####2 | 581/1377 [01:05<01:26, 9.19it/s] 42%|####2 | 582/1377 [01:06<01:27, 9.12it/s] 42%|####2 | 583/1377 [01:06<01:26, 9.19it/s] 42%|####2 | 584/1377 [01:06<01:26, 9.19it/s] 42%|####2 | 585/1377 [01:06<01:24, 9.35it/s] 43%|####2 | 586/1377 [01:06<01:26, 9.10it/s] 43%|####2 | 587/1377 [01:06<01:27, 9.05it/s] 43%|####2 | 588/1377 [01:06<01:25, 9.22it/s] 43%|####2 | 589/1377 [01:06<01:24, 9.33it/s] 43%|####2 | 590/1377 [01:06<01:24, 9.37it/s] 43%|####2 | 591/1377 [01:07<01:24, 9.31it/s] 43%|####2 | 592/1377 [01:07<01:23, 9.43it/s] 43%|####3 | 594/1377 [01:07<01:20, 9.67it/s] 43%|####3 | 595/1377 [01:07<01:22, 9.48it/s] 43%|####3 | 596/1377 [01:07<01:23, 9.41it/s] 43%|####3 | 598/1377 [01:07<01:21, 9.61it/s] 44%|####3 | 599/1377 [01:07<01:22, 
9.49it/s] 44%|####3 | 600/1377 [01:07<01:21, 9.52it/s] 44%|####3 | 601/1377 [01:08<01:24, 9.20it/s] 44%|####3 | 603/1377 [01:08<01:23, 9.22it/s] 44%|####3 | 604/1377 [01:08<01:24, 9.20it/s] 44%|####3 | 605/1377 [01:08<01:24, 9.16it/s] 44%|####4 | 606/1377 [01:08<01:23, 9.26it/s] 44%|####4 | 608/1377 [01:08<01:19, 9.70it/s] 44%|####4 | 609/1377 [01:08<01:19, 9.67it/s] 44%|####4 | 610/1377 [01:09<01:18, 9.72it/s] 44%|####4 | 611/1377 [01:09<01:20, 9.50it/s] 45%|####4 | 613/1377 [01:09<01:19, 9.66it/s] 45%|####4 | 614/1377 [01:09<01:19, 9.61it/s] 45%|####4 | 615/1377 [01:09<01:21, 9.40it/s] 45%|####4 | 616/1377 [01:09<01:21, 9.35it/s] 45%|####4 | 617/1377 [01:09<01:20, 9.42it/s] 45%|####4 | 618/1377 [01:09<01:20, 9.40it/s] 45%|####4 | 619/1377 [01:10<01:21, 9.30it/s] 45%|####5 | 620/1377 [01:10<01:21, 9.33it/s] 45%|####5 | 621/1377 [01:10<01:21, 9.27it/s] 45%|####5 | 622/1377 [01:10<01:21, 9.22it/s] 45%|####5 | 623/1377 [01:10<01:26, 8.76it/s] 45%|####5 | 624/1377 [01:10<01:24, 8.92it/s] 45%|####5 | 626/1377 [01:10<01:20, 9.37it/s] 46%|####5 | 627/1377 [01:10<01:22, 9.07it/s] 46%|####5 | 628/1377 [01:10<01:22, 9.13it/s] 46%|####5 | 629/1377 [01:11<01:21, 9.16it/s] 46%|####5 | 630/1377 [01:11<01:21, 9.17it/s] 46%|####5 | 631/1377 [01:11<01:22, 9.08it/s] 46%|####5 | 633/1377 [01:11<01:20, 9.20it/s] 46%|####6 | 634/1377 [01:11<01:21, 9.15it/s] 46%|####6 | 635/1377 [01:11<01:23, 8.89it/s] 46%|####6 | 636/1377 [01:11<01:22, 8.95it/s] 46%|####6 | 637/1377 [01:11<01:21, 9.07it/s] 46%|####6 | 638/1377 [01:12<01:21, 9.08it/s] 46%|####6 | 639/1377 [01:12<01:21, 9.11it/s] 46%|####6 | 640/1377 [01:12<01:19, 9.23it/s] 47%|####6 | 641/1377 [01:12<01:18, 9.32it/s] 47%|####6 | 642/1377 [01:12<01:19, 9.24it/s] 47%|####6 | 643/1377 [01:12<01:18, 9.41it/s] 47%|####6 | 644/1377 [01:12<01:23, 8.77it/s] 47%|####6 | 645/1377 [01:12<01:24, 8.64it/s] 47%|####6 | 647/1377 [01:13<01:19, 9.14it/s] 47%|####7 | 648/1377 [01:13<01:19, 9.16it/s] 47%|####7 | 649/1377 [01:13<01:19, 9.13it/s] 47%|####7 
| 651/1377 [01:13<01:16, 9.48it/s] 47%|####7 | 652/1377 [01:13<01:17, 9.37it/s] 47%|####7 | 653/1377 [01:13<01:17, 9.36it/s] 47%|####7 | 654/1377 [01:13<01:18, 9.24it/s] 48%|####7 | 655/1377 [01:13<01:17, 9.34it/s] 48%|####7 | 657/1377 [01:14<01:12, 9.91it/s] 48%|####7 | 658/1377 [01:14<01:13, 9.75it/s] 48%|####7 | 659/1377 [01:14<01:14, 9.59it/s] 48%|####8 | 661/1377 [01:14<01:16, 9.38it/s] 48%|####8 | 662/1377 [01:14<01:16, 9.36it/s] 48%|####8 | 663/1377 [01:14<01:16, 9.37it/s] 48%|####8 | 665/1377 [01:14<01:14, 9.61it/s] 48%|####8 | 666/1377 [01:15<01:16, 9.32it/s] 48%|####8 | 667/1377 [01:15<01:16, 9.24it/s] 49%|####8 | 668/1377 [01:15<01:17, 9.20it/s] 49%|####8 | 669/1377 [01:15<01:17, 9.17it/s] 49%|####8 | 670/1377 [01:15<01:17, 9.17it/s] 49%|####8 | 671/1377 [01:15<01:17, 9.08it/s] 49%|####8 | 672/1377 [01:15<01:17, 9.13it/s] 49%|####8 | 673/1377 [01:15<01:16, 9.26it/s] 49%|####8 | 674/1377 [01:15<01:15, 9.31it/s] 49%|####9 | 675/1377 [01:16<01:16, 9.21it/s] 49%|####9 | 676/1377 [01:16<01:16, 9.15it/s] 49%|####9 | 677/1377 [01:16<01:16, 9.16it/s] 49%|####9 | 678/1377 [01:16<01:17, 9.07it/s] 49%|####9 | 679/1377 [01:16<01:18, 8.84it/s] 49%|####9 | 680/1377 [01:16<01:19, 8.77it/s] 49%|####9 | 681/1377 [01:16<01:17, 8.98it/s] 50%|####9 | 682/1377 [01:16<01:16, 9.13it/s] 50%|####9 | 683/1377 [01:16<01:16, 9.09it/s] 50%|####9 | 684/1377 [01:17<01:15, 9.14it/s] 50%|####9 | 685/1377 [01:17<01:15, 9.13it/s] 50%|####9 | 686/1377 [01:17<01:17, 8.88it/s] 50%|####9 | 687/1377 [01:17<01:16, 9.05it/s] 50%|####9 | 688/1377 [01:17<01:14, 9.20it/s] 50%|##### | 690/1377 [01:17<01:12, 9.44it/s] 50%|##### | 691/1377 [01:17<01:16, 8.99it/s] 50%|##### | 692/1377 [01:17<01:18, 8.68it/s] 50%|##### | 693/1377 [01:18<01:17, 8.79it/s] 50%|##### | 694/1377 [01:18<01:17, 8.86it/s] 50%|##### | 695/1377 [01:18<01:15, 9.06it/s] 51%|##### | 696/1377 [01:18<01:16, 8.85it/s] 51%|##### | 697/1377 [01:18<01:18, 8.64it/s] 51%|##### | 698/1377 [01:18<01:16, 8.89it/s] 51%|##### | 699/1377 
[01:18<01:18, 8.66it/s] 51%|##### | 700/1377 [01:18<01:16, 8.81it/s] 51%|##### | 701/1377 [01:18<01:17, 8.77it/s] 51%|##### | 702/1377 [01:19<01:15, 8.89it/s] 51%|#####1 | 704/1377 [01:19<01:13, 9.22it/s] 51%|#####1 | 705/1377 [01:19<01:12, 9.27it/s] 51%|#####1 | 707/1377 [01:19<01:08, 9.76it/s] 51%|#####1 | 708/1377 [01:19<01:10, 9.44it/s] 51%|#####1 | 709/1377 [01:19<01:10, 9.43it/s] 52%|#####1 | 710/1377 [01:19<01:13, 9.11it/s] 52%|#####1 | 711/1377 [01:20<01:12, 9.19it/s] 52%|#####1 | 712/1377 [01:20<01:11, 9.36it/s] 52%|#####1 | 714/1377 [01:20<01:10, 9.46it/s] 52%|#####1 | 715/1377 [01:20<01:11, 9.29it/s] 52%|#####1 | 716/1377 [01:20<01:11, 9.29it/s] 52%|#####2 | 717/1377 [01:20<01:10, 9.34it/s] 52%|#####2 | 719/1377 [01:20<01:07, 9.70it/s] 52%|#####2 | 720/1377 [01:20<01:07, 9.68it/s] 52%|#####2 | 722/1377 [01:21<01:07, 9.71it/s] 53%|#####2 | 723/1377 [01:21<01:08, 9.53it/s] 53%|#####2 | 724/1377 [01:21<01:09, 9.46it/s] 53%|#####2 | 725/1377 [01:21<01:10, 9.28it/s] 53%|#####2 | 726/1377 [01:21<01:12, 8.96it/s] 53%|#####2 | 728/1377 [01:21<01:07, 9.59it/s] 53%|#####2 | 729/1377 [01:21<01:07, 9.59it/s] 53%|#####3 | 731/1377 [01:22<01:06, 9.66it/s] 53%|#####3 | 733/1377 [01:22<01:05, 9.84it/s] 53%|#####3 | 735/1377 [01:22<01:05, 9.85it/s] 53%|#####3 | 736/1377 [01:22<01:07, 9.53it/s] 54%|#####3 | 738/1377 [01:22<01:06, 9.60it/s] 54%|#####3 | 739/1377 [01:22<01:06, 9.56it/s] 54%|#####3 | 741/1377 [01:23<01:05, 9.73it/s] 54%|#####3 | 743/1377 [01:23<01:04, 9.79it/s] 54%|#####4 | 745/1377 [01:23<01:05, 9.65it/s] 54%|#####4 | 746/1377 [01:23<01:05, 9.61it/s] 54%|#####4 | 748/1377 [01:23<01:04, 9.72it/s] 54%|#####4 | 749/1377 [01:24<01:05, 9.63it/s] 55%|#####4 | 751/1377 [01:24<01:04, 9.72it/s] 55%|#####4 | 752/1377 [01:24<01:05, 9.57it/s] 55%|#####4 | 753/1377 [01:24<01:06, 9.41it/s] 55%|#####4 | 755/1377 [01:24<01:04, 9.64it/s] 55%|#####4 | 756/1377 [01:24<01:04, 9.60it/s] 55%|#####4 | 757/1377 [01:24<01:09, 8.97it/s] 55%|#####5 | 759/1377 [01:25<01:05, 9.45it/s] 
55%|#####5 | 761/1377 [01:25<01:03, 9.65it/s] 55%|#####5 | 762/1377 [01:25<01:04, 9.59it/s] 55%|#####5 | 763/1377 [01:25<01:05, 9.40it/s] 55%|#####5 | 764/1377 [01:25<01:04, 9.48it/s] 56%|#####5 | 765/1377 [01:25<01:05, 9.32it/s] 56%|#####5 | 766/1377 [01:25<01:05, 9.35it/s] 56%|#####5 | 767/1377 [01:25<01:06, 9.23it/s] 56%|#####5 | 768/1377 [01:26<01:08, 8.96it/s] 56%|#####5 | 769/1377 [01:26<01:07, 9.01it/s] 56%|#####5 | 770/1377 [01:26<01:06, 9.12it/s] 56%|#####6 | 772/1377 [01:26<01:02, 9.65it/s] 56%|#####6 | 773/1377 [01:26<01:02, 9.61it/s] 56%|#####6 | 774/1377 [01:26<01:02, 9.60it/s] 56%|#####6 | 775/1377 [01:26<01:02, 9.61it/s] 56%|#####6 | 777/1377 [01:26<01:02, 9.61it/s] 57%|#####6 | 779/1377 [01:27<01:01, 9.66it/s] 57%|#####6 | 780/1377 [01:27<01:02, 9.62it/s] 57%|#####6 | 782/1377 [01:27<01:00, 9.80it/s] 57%|#####6 | 784/1377 [01:27<01:01, 9.65it/s] 57%|#####7 | 785/1377 [01:27<01:01, 9.55it/s] 57%|#####7 | 786/1377 [01:27<01:02, 9.52it/s] 57%|#####7 | 787/1377 [01:28<01:02, 9.47it/s] 57%|#####7 | 788/1377 [01:28<01:02, 9.42it/s] 57%|#####7 | 789/1377 [01:28<01:02, 9.43it/s] 57%|#####7 | 790/1377 [01:28<01:02, 9.45it/s] 57%|#####7 | 791/1377 [01:28<01:03, 9.30it/s] 58%|#####7 | 792/1377 [01:28<01:02, 9.35it/s] 58%|#####7 | 793/1377 [01:28<01:01, 9.43it/s] 58%|#####7 | 794/1377 [01:28<01:01, 9.52it/s] 58%|#####7 | 795/1377 [01:28<01:00, 9.60it/s] 58%|#####7 | 796/1377 [01:28<01:01, 9.48it/s] 58%|#####7 | 797/1377 [01:29<01:02, 9.30it/s] 58%|#####7 | 798/1377 [01:29<01:04, 8.96it/s] 58%|#####8 | 799/1377 [01:29<01:04, 8.97it/s] 58%|#####8 | 800/1377 [01:29<01:03, 9.16it/s] 58%|#####8 | 802/1377 [01:29<00:59, 9.62it/s] 58%|#####8 | 803/1377 [01:29<01:02, 9.22it/s] 58%|#####8 | 804/1377 [01:29<01:01, 9.26it/s] 58%|#####8 | 805/1377 [01:29<01:01, 9.31it/s] 59%|#####8 | 807/1377 [01:30<01:00, 9.42it/s] 59%|#####8 | 808/1377 [01:30<01:00, 9.37it/s] 59%|#####8 | 809/1377 [01:30<01:01, 9.30it/s] 59%|#####8 | 810/1377 [01:30<01:01, 9.29it/s] 59%|#####8 | 811/1377 
## /Users/peltouz/Documents/GitHub/M2-Py-DS2E/hf/lib/python3.13/site-packages/torch/utils/data/dataloader.py:692: UserWarning: 'pin_memory' argument is set as true but not supported on MPS now, device pinned memory won't be used.

## warnings.warn(warn_msg)

## {'eval_loss': 0.424141526222229, 'eval_accuracy': 0.8480392156862745, 'eval_f1': 0.8938356164383562, 'eval_runtime': 2.1727, 'eval_samples_per_second': 187.785, 'eval_steps_per_second': 23.473, 'epoch': 2.0}

## {'loss': 0.3412, 'grad_norm': 0.13441860675811768, 'learning_rate': 1.3725490196078432e-05, 'epoch': 2.18}
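The `learning_rate` and `epoch` values in the last log line can be checked by hand. The sketch below assumes the `Trainer` defaults were used: an initial learning rate of 5e-5, linear decay to zero, and no warmup. These settings are assumptions, not values shown in the log. The step counts come from the progress bars: 1377 optimizer steps in total, with evaluation at step 918 (epoch 2.0), which gives 459 steps per epoch. The sketch also assumes the logged rate is the one applied during step 1000, read before the scheduler advances.

```python
import math

# Assumed Trainer defaults (not shown in the log): initial lr, linear decay, no warmup.
INITIAL_LR = 5e-5
# Taken from the progress bars: 1377 steps in total, evaluation at step 918 = epoch 2.0.
TOTAL_STEPS = 1377
STEPS_PER_EPOCH = 918 // 2  # 459 steps per epoch, so 3 epochs in total


def linear_lr(step: int) -> float:
    """Learning rate applied during optimizer step `step` (1-indexed).

    With no warmup, linear decay scales the initial rate by
    (TOTAL_STEPS - k) / TOTAL_STEPS, where k is the number of times
    the scheduler has already advanced (step - 1 before this step runs).
    """
    scheduler_steps_done = step - 1
    return INITIAL_LR * (TOTAL_STEPS - scheduler_steps_done) / TOTAL_STEPS


lr = linear_lr(1000)
print(f"{lr:.4e}")                                          # 1.3725e-05
print(math.isclose(lr, 1.3725490196078432e-05, rel_tol=1e-9))  # True
print(round(1000 / STEPS_PER_EPOCH, 2))                     # 2.18, the logged 'epoch'
```

Under these assumptions, the rate at step 1000 is 5e-5 × 378 / 1377 ≈ 1.3725e-05, which agrees with the log. A mismatch would suggest a non-default learning rate, warmup, or scheduler type.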